Azure Data Factory Event Triggers

If you’re still triggering your ETL processes on a schedule (maybe once an hour or at a set time each night), there is a better way. In this post I’ll walk through Event Triggers within Azure Data Factory.

In today’s world we prefer an approach where processes are initiated by the occurrence of an event, and Azure Data Factory Event Triggers do this for us. An Event Trigger fires when a blob (a file) is created in a blob storage container or deleted from it. For example, placing a file in a container can kick off an Azure Data Factory pipeline.

These triggers are built on Azure Event Grid, which can be used for a variety of event-driven processing in Azure; Azure Data Factory uses it under the covers.

It’s important to know that, as of today, Event Triggers fire only on the creation or deletion of a blob in a blob container. Since they are built on Event Grid, I’d like to think we may get the option to drive them from other events in the future.
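To make those two events a bit more concrete, here is a minimal sketch of recognizing them in an Event Grid payload. The event type names and the subject layout follow the Event Grid schema for blob storage events as I understand it; the helper name and the sample event are made up for illustration, and this is not how Data Factory itself is implemented.

```python
import re

# The two storage event types that Event Triggers react to today.
SUPPORTED_EVENTS = {
    "Microsoft.Storage.BlobCreated",
    "Microsoft.Storage.BlobDeleted",
}

# Storage events carry the container and blob name in the subject, e.g.
# /blobServices/default/containers/<container>/blobs/<blob name>
SUBJECT_PATTERN = re.compile(
    r"^/blobServices/default/containers/(?P<container>[^/]+)/blobs/(?P<blob>.+)$"
)


def parse_blob_event(event: dict):
    """Return (event_type, container, blob_name), or None for anything else."""
    event_type = event.get("eventType")
    if event_type not in SUPPORTED_EVENTS:
        return None
    match = SUBJECT_PATTERN.match(event.get("subject", ""))
    if match is None:
        return None
    return event_type, match.group("container"), match.group("blob")


# Hypothetical, trimmed-down event for illustration only.
sample_event = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/landing/blobs/sales/2019/orders.csv",
}
```

Calling `parse_blob_event(sample_event)` gives back the event type, the container (`landing`) and the blob name, which is the same information the trigger keys on.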

It’s also good to note that we don’t have to use the functionality within Azure Data Factory to kick off a pipeline; we can use our own triggers. For example, we can have a Logic App call an Azure Function, and that function might kick off a pipeline based on some event that happens inside our app.
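As a rough sketch of what such a home-grown trigger might do, the function below builds the Data Factory REST call that starts a pipeline run (the `createRun` operation). The function name and all argument values are placeholders of mine, and getting the bearer token (for example through a managed identity) is assumed to happen elsewhere.

```python
import json
import urllib.parse
import urllib.request

MANAGEMENT_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"


def build_create_run_request(subscription_id, resource_group, factory_name,
                             pipeline_name, bearer_token, parameters=None):
    """Build (but do not send) the REST request that starts a pipeline run."""
    path = (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
        f"/pipelines/{urllib.parse.quote(pipeline_name)}/createRun"
    )
    url = f"{MANAGEMENT_ENDPOINT}{path}?api-version={API_VERSION}"
    # Pipeline parameters travel as a flat JSON object in the request body.
    body = json.dumps(parameters or {}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the result to `urllib.request.urlopen` would send it, and a successful response carries the `runId` of the new pipeline run. Everything around that call (authentication, retries, logging) is ours to own, which is the trade-off of working outside the built-in triggers.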

If we do use our own triggers, we are outside of the framework of Azure Data Factory. The nice thing about Event Triggers is they are all managed inside the framework of Data Factory.

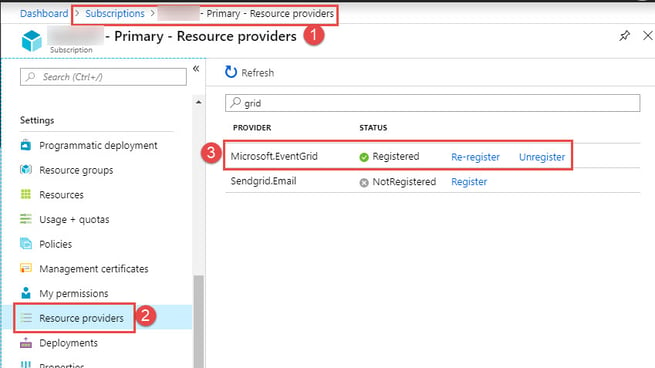

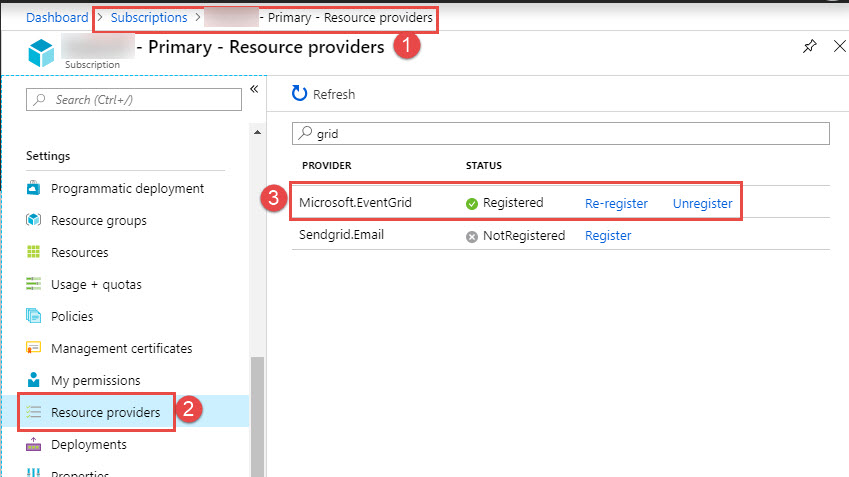

Before we can use Event Triggers, we must register the Microsoft Event Grid resource provider (which I show in the screenshot below). Registration is a property of your subscription, so you’ll have to register the provider there before you can use Event Triggers in Azure Data Factory.
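If you would rather script this step than click through the portal, the sketch below wraps the Azure CLI’s `az provider register` and `az provider show` commands. It assumes the Azure CLI is installed and already logged in to the right subscription; the function names are my own.

```python
import subprocess

EVENT_GRID_NAMESPACE = "Microsoft.EventGrid"


def register_command(namespace: str = EVENT_GRID_NAMESPACE) -> list:
    """Azure CLI arguments that register a resource provider on the current subscription."""
    return ["az", "provider", "register", "--namespace", namespace]


def state_command(namespace: str = EVENT_GRID_NAMESPACE) -> list:
    """Azure CLI arguments that print the provider's registration state."""
    return [
        "az", "provider", "show",
        "--namespace", namespace,
        "--query", "registrationState",
        "--output", "tsv",
    ]


def ensure_registered(namespace: str = EVENT_GRID_NAMESPACE) -> str:
    """Request registration, then return the state the CLI reports."""
    subprocess.run(register_command(namespace), check=True)
    result = subprocess.run(
        state_command(namespace), check=True, capture_output=True, text=True
    )
    return result.stdout.strip()
```

Registration is not instant, so the reported state may read `Registering` for a while before it becomes `Registered`.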

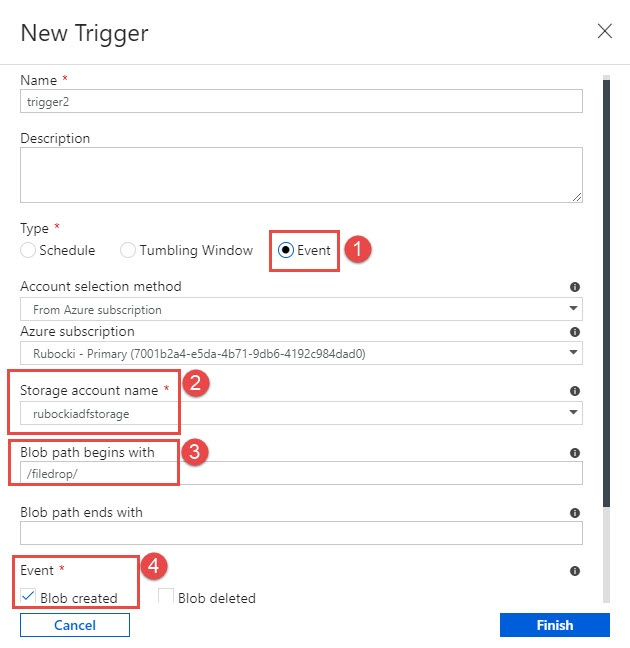

My next screenshot shows how to create a new trigger:

We do this from the Azure Data Factory designer. When creating a trigger, we can choose a schedule, a tumbling window, or an event; in my case, I want to choose event. Once I choose event, I need to select the storage account that we’re going to be keying on and the file path or pattern that we’re going to be looking for.

So, for instance, I can set this up so that a file placed in a certain container triggers the pipeline, or so that only a file created with a certain naming pattern does. This is all configured in the designer.
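The path filters boil down to a "begins with" and an "ends with" check. Here is a small sketch that approximates that behavior, assuming the `/<container>/blobs/<blob name>` form that the trigger’s begins-with filter uses. The function names and sample paths are mine, and the real matching happens inside the service, so treat this as a mental model rather than the actual implementation.

```python
def event_path(container: str, blob_name: str) -> str:
    """Path in the '/<container>/blobs/<blob name>' form used by the path filters."""
    return f"/{container}/blobs/{blob_name}"


def trigger_matches(container: str, blob_name: str,
                    begins_with: str = "", ends_with: str = "") -> bool:
    """Approximate the prefix/suffix check; an empty filter matches everything."""
    path = event_path(container, blob_name)
    return path.startswith(begins_with) and path.endswith(ends_with)


# Only .csv files under the sales folder of the landing container.
only_sales_csv = {"begins_with": "/landing/blobs/sales/", "ends_with": ".csv"}

matches = trigger_matches("landing", "sales/2019/orders.csv", **only_sales_csv)
wrong_type = trigger_matches("landing", "sales/2019/orders.json", **only_sales_csv)
wrong_container = trigger_matches("archive", "sales/2019/orders.csv", **only_sales_csv)
```

Here `matches` is true, while the `.json` file and the file in the other container are both rejected.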

In my example, you’ll see I’ve made the selection to fire a trigger when a blob is created. You can also choose to do it when a blob is deleted.
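Behind the designer, a trigger like this is stored as a JSON definition of type `BlobEventsTrigger`. The sketch below builds one as a Python dictionary so you can see the moving parts. The trigger name, the paths and the storage account ID are placeholders, and the property names reflect the trigger schema as I understand it, so check them against the JSON your own factory generates.

```python
import json


def blob_event_trigger(name, storage_account_id, begins_with, ends_with,
                       events=("Microsoft.Storage.BlobCreated",)):
    """Build a trigger definition shaped like the JSON the designer produces."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "blobPathBeginsWith": begins_with,
                "blobPathEndsWith": ends_with,
                "ignoreEmptyBlobs": True,
                # Resource ID of the storage account being watched.
                "scope": storage_account_id,
                "events": list(events),
            },
        },
    }


# Placeholder resource ID; substitute your own subscription, group and account.
STORAGE_ACCOUNT_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/<storage-account>"
)

trigger = blob_event_trigger(
    name="OrdersFileArrived",
    storage_account_id=STORAGE_ACCOUNT_ID,
    begins_with="/landing/blobs/sales/",
    ends_with=".csv",
)
print(json.dumps(trigger, indent=2))
```

To react to deletions instead, pass `events=("Microsoft.Storage.BlobDeleted",)`.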

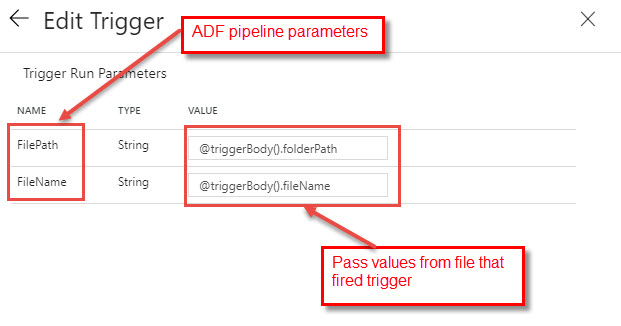

Now that I’ve created the trigger, the next step is to associate my pipeline with it:

This works the same as it does with other triggers. From my pipeline, I say that I want to add a trigger and then choose the event trigger that I created in the previous step. What’s interesting here is that if I have parameters configured on my pipeline, I can take the file path and the file name of the blob that was created and pass those as inputs, or context, into my execution, which is very handy and powerful.
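In the trigger’s JSON, that association is a pipeline reference plus a parameter mapping, and the blob’s folder and file name are available through the `@triggerBody().folderPath` and `@triggerBody().fileName` expressions. Here is a minimal sketch of that mapping; the pipeline name and the parameter names `sourceFolder` and `sourceFile` are hypothetical, and the starting trigger is a stripped-down stand-in.

```python
import json


def attach_pipeline(trigger: dict, pipeline_name: str, parameter_map: dict) -> dict:
    """Return a copy of a trigger definition with one pipeline reference added."""
    updated = json.loads(json.dumps(trigger))  # cheap deep copy of JSON-like data
    updated["properties"].setdefault("pipelines", []).append({
        "pipelineReference": {
            "referenceName": pipeline_name,
            "type": "PipelineReference",
        },
        "parameters": parameter_map,
    })
    return updated


# Minimal stand-in for an existing event trigger definition.
trigger = {"name": "OrdersFileArrived", "properties": {"type": "BlobEventsTrigger"}}

with_pipeline = attach_pipeline(
    trigger,
    pipeline_name="LoadSales",
    parameter_map={
        # Expressions are resolved by Data Factory when the trigger fires.
        "sourceFolder": "@triggerBody().folderPath",
        "sourceFile": "@triggerBody().fileName",
    },
)
print(json.dumps(with_pipeline, indent=2))
```

Inside the pipeline, those two parameters can then feed a dataset or a copy activity, so each run works on exactly the file that fired the trigger.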

Event Triggers are incredibly useful and give us a much better option for triggering our ETL processes. If you have any questions on Event Triggers, Azure Data Factory or data warehousing in the cloud, you’re in the right place. Click the link or contact us—we’re here to help you no matter where you are on your Azure journey.
