How to Integrate Azure DevOps within Azure Databricks

In this post in our Databricks mini-series, I’d like to talk about integrating Azure DevOps within Azure Databricks. Databricks connects easily with Azure DevOps, and the integration relies on two primary features. The first is Git, which stores our notebooks so we can look back and see how they have changed. The second is the DevOps pipeline, which lets you deploy notebooks to different environments.

In my video included in this post, I’ll show you how to save Databricks notebooks using Azure DevOps Git and how to deploy your notebooks using a DevOps pipeline. Be sure to check it out. Here’s a breakdown:

Saving Notebooks:

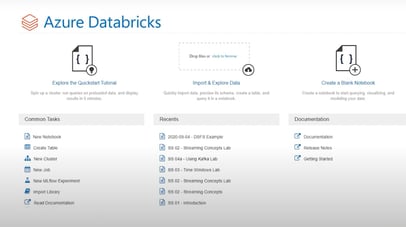

- We start by launching a workspace in our Databricks service. You’ll see that my cluster has been started.

- To get the Git integration to work, we open the Admin Console and click Advanced. Make sure that Notebook Git Versioning is enabled.

- Under User Settings, go into Git integration and choose Azure DevOps as the Git provider.

- Next, open any notebook in the workspace. A green check mark in the top left shows that the notebook is synced with Git.

- We can confirm this in our Azure DevOps account (dev.azure.com), which offers a variety of features, such as Repos and Pipelines.

- To create a new repo, click Repos and then New repository from the menu. In my demo I have already created a repo that contains a series of files; in my case, these are notebooks. Because they are saved in my DevOps account, they show up as Git synced in my Databricks workspace.

- If you open a notebook and see that it is not linked, click where it says ‘Not linked’ to open the Git Preferences dialog. Set the status to ‘Link’, which pre-fills the link for your notebook, then save it; the notebook will now show as synced. This is a nice feature for storing your database objects.
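
A notebook is linked to the URL of the Azure Repos repository that holds it. The sketch below assumes the standard Azure Repos pattern `https://dev.azure.com/{organization}/{project}/_git/{repository}`. It shows how that URL is put together and percent-encodes names that contain spaces. The organization, project, and repository names are hypothetical placeholders, and the helper is an illustration only, not part of Databricks or Azure DevOps.

```python
from urllib.parse import quote


def azure_repos_url(organization: str, project: str, repository: str) -> str:
    """Build the HTTPS URL of an Azure Repos Git repository.

    Assumes the https://dev.azure.com/{organization}/{project}/_git/{repository}
    pattern; spaces and other unsafe characters in each name are percent-encoded.
    """
    parts = [quote(name, safe="") for name in (organization, project, repository)]
    return "https://dev.azure.com/{}/{}/_git/{}".format(*parts)


if __name__ == "__main__":
    # Hypothetical names for illustration only.
    print(azure_repos_url("contoso", "Data Platform", "databricks-notebooks"))
    # prints https://dev.azure.com/contoso/Data%20Platform/_git/databricks-notebooks
```

If the Git Preferences dialog already pre-fills the link, you can simply compare that value against the URL shown under Repos > Clone in Azure DevOps.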

Pipelines:

- Pipelines within the DevOps framework allow you to deploy code seamlessly.

- I created a pipeline in my demo and when I open it, I click on the edit button to see the contents.

- The pipeline runs on a Windows Server agent drawn from an agent pool. When the job starts, it picks up the next available Windows Server, runs its tasks, and then goes back into the pool.

- Next, I click on Get Sources and select Azure Repos Git. I’m connected to the Azure Databricks repository and its master branch.

- Now I need to set up the agent job. For Azure DevOps, we had to install a Microsoft extension that provides the Configure Databricks CLI task, because it isn’t available out of the box. (See my demo for more detail on this.)

- I need to specify the workspace URL and then my access token. To get this token, go to User Settings in Databricks and click Generate new token. A tip here: be sure to copy and store your token somewhere safe, because once it’s gone, it’s gone!

- Next, we add a step that deploys our notebooks in the workflow and specify the notebooks folder and the root (target) folder in the workspace. To kick off this job, click Pipelines in the menu on the left and then click Run pipeline. In my demo we are just taking our notebooks from Dev and pushing them back to Dev.

- Strategically, we could have test, UAT, and production environments. Once they are configured, we could specify each of those workspaces and push to them seamlessly, which cuts down on administrative tasks.
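
In this walkthrough, the extension’s tasks handle the deploy step. The sketch below only illustrates roughly what such a step does; it is not the extension’s implementation. It pushes a folder of Python notebooks to a chosen workspace through the Databricks Workspace REST API (`/api/2.0/workspace/mkdirs` and `/api/2.0/workspace/import`) and sends the personal access token as a Bearer token. Several pieces are hypothetical: the `WORKSPACES` mapping and its URLs, the folder names, and the function names. It also assumes every notebook is a `.py` source file.

```python
import base64
import json
import posixpath
import urllib.request
from pathlib import Path, PurePosixPath

# Hypothetical environment-to-workspace mapping; replace with your own
# workspace URLs (and add UAT/production entries as they are configured).
WORKSPACES = {
    "dev": "https://adb-1111111111111111.11.azuredatabricks.net",
    "test": "https://adb-2222222222222222.12.azuredatabricks.net",
}


def workspace_path_for(relative_file: str, target_root: str) -> str:
    """Map a repo-relative file such as 'etl/load.py' to '<root>/etl/load'."""
    stem = PurePosixPath(relative_file).with_suffix("")
    return f"{target_root.rstrip('/')}/{stem.as_posix()}"


def import_payload(workspace_path: str, source: bytes) -> dict:
    """Body for POST /api/2.0/workspace/import (Python notebook, SOURCE format)."""
    return {
        "path": workspace_path,
        "format": "SOURCE",
        "language": "PYTHON",
        "content": base64.b64encode(source).decode("ascii"),
        "overwrite": True,
    }


def _post(host: str, endpoint: str, token: str, body: dict) -> None:
    """POST a JSON body to a Databricks REST endpoint with a personal access token."""
    request = urllib.request.Request(
        f"{host}{endpoint}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        response.read()


def deploy(env: str, notebooks_dir: str, target_root: str, token: str) -> None:
    """Import every .py notebook under notebooks_dir into the workspace for env."""
    host = WORKSPACES[env]
    root = Path(notebooks_dir)
    for notebook in sorted(root.rglob("*.py")):
        path = workspace_path_for(notebook.relative_to(root).as_posix(), target_root)
        # Create the parent folder first; mkdirs does not fail if it already exists.
        _post(host, "/api/2.0/workspace/mkdirs", token,
              {"path": posixpath.dirname(path)})
        _post(host, "/api/2.0/workspace/import", token,
              import_payload(path, notebook.read_bytes()))
```

For example, `deploy("test", "notebooks", "/Shared", token)` would push the same notebooks to the hypothetical test workspace instead of Dev. In a real pipeline, the token should come from a secret pipeline variable rather than being hard-coded.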

I find that the DevOps pipeline, as well as the release pipeline, is the best thing to come around in a long time. It’s a tremendous asset to have in your arsenal. We use it a lot, not just for Databricks, but also for Data Factory, Key Vault, and Storage Accounts, as well as other things.

Again, take a look at my demo for a detailed walkthrough of integrating Databricks with Git and the DevOps pipeline. If you want to discuss more about Azure Databricks or have questions about Azure or the Power Platform, we’d love to help. Our expert team has all the knowledge and experience to show you how to integrate any Azure product or service in your organization.
