Azure Data Factory v2 Parameter Passing: Linked Services

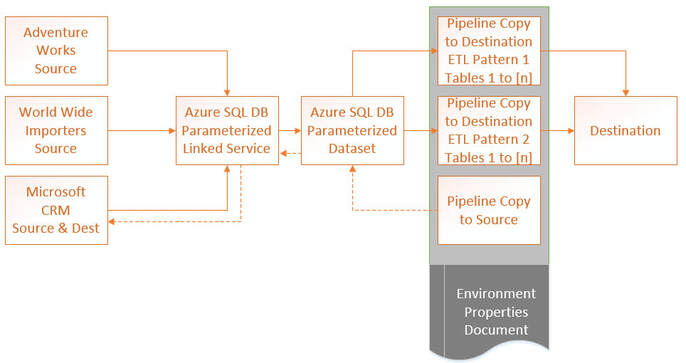

This is blog post 3 of 3 on using parameters in Azure Data Factory (ADF). Blog post #1 was about parameterizing dates and incremental loads. Blog post #2 was about table names and using a single pipeline to stage all tables in a source. Today I am talking about parameterizing linked services.

Disclaimer: Not all linked service types can be parameterized at this time. This feature only applies to eight data stores:

- Azure SQL Database

- Azure SQL Data Warehouse

- SQL Server

- Oracle

- Cosmos DB

- Amazon Redshift

- MySQL

- Azure Database for MySQL

Concept Explained:

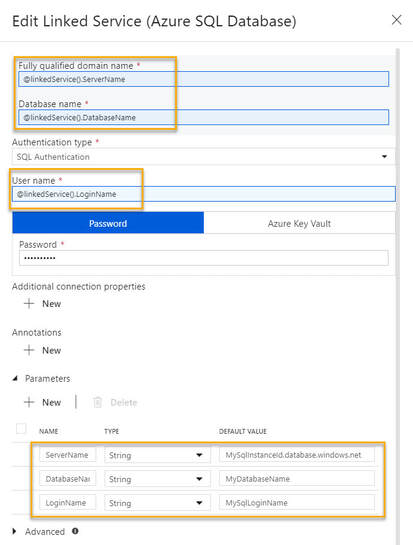

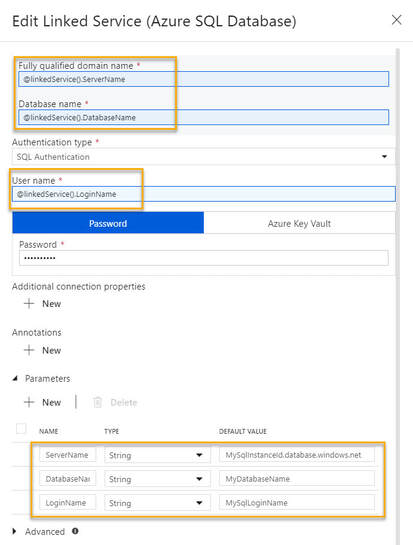

The concept is pretty straightforward and if our goal is to have one linked service per type, a parameterized Azure SQL Database linked service might look like this:

|

You can parameterize your password as well but best practice is to use Azure Key Vault.

Examples of parameter values might be: ServerName: dbradish2.database.windows.net DatabaseName: AdventureWorks LoginName: DBloaderADaccount In case the screen print is difficult to read, the dynamic content is @linkedService().CaseSensitiveParameterName |

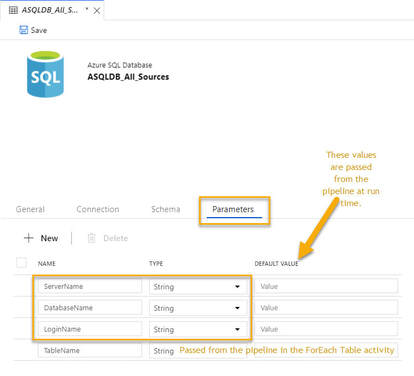

Moving on the the single dataset that uses this linked service, we have the following.

|

First add the three linked service parameters to the dataset.

The TableName dataset parameter is not used for linked services, but gets its value from the ForEach Table activity in the pipeline. |

|

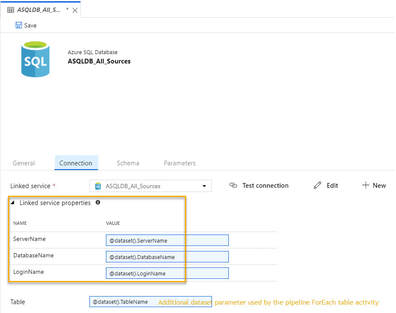

Navigating the the Connection tab of the dataset, we give the linked service properties dynamic content.

In case the screen print is difficult to read, the dynamic content is simply @dataset().DatasetParameterName |

Putting it all Together:

What we are after here is a repeatable process, simplicity of design, fewer developer hours, easier deployments and more efficient DevOps. We also want very little hard-coding of parameter values; thus the environment properties document. If we find that we have lost flexibility in our pipelines that are "doing to much" and a change impacts "too many destinations", rethink the parameters and kick them up a notch. Even with the above design, we should be able to execute the load of just one source, or just one table of a source. We should also be able to complete data-driven full and incremental loads with these same pipeline activities. Metadata ADF control tables and ADF orchestrator pipelines (pipelines that call other child pipelines and govern dependency), can also be helpful.

Final Musings:

Obviously the above pattern works best if you are staging your data into your destination, then doing your transform and load (ELT). This brings me to a growing realization that with SSIS I had turned away from transform user stored procedures (USPs) and became disciplined in using SSIS in-memory capabilities. With a recent Azure Data Warehouse project, however, I had to renew my love of USPs. So now I am, quite frankly, betwixt and between ADF parameter capabilities for staging, Databricks performance capabilities for transforms, and our old friend, the stored procedure. For everything there is an appropriate place, so once again, let's get back to the architecture diagram and figure out what works best for your unique requirements.

Final Musings:

Obviously the above pattern works best if you are staging your data into your destination, then doing your transform and load (ELT). This brings me to a growing realization that with SSIS I had turned away from transform user stored procedures (USPs) and became disciplined in using SSIS in-memory capabilities. With a recent Azure Data Warehouse project, however, I had to renew my love of USPs. So now I am, quite frankly, betwixt and between ADF parameter capabilities for staging, Databricks performance capabilities for transforms, and our old friend, the stored procedure. For everything there is an appropriate place, so once again, let's get back to the architecture diagram and figure out what works best for your unique requirements.

Need help with this or anything Azure related? Contact us to start a conversation with our Azure experts today - we can help, no matter where you are on your cloud journey.

Sign-up now and get instant access

Free Community Plan

On-demand learning

Most Recent

private training

.jpg?width=406&name=Eans%20YouTube%20Thumbnails%20(2).jpg)

-1.png)

Leave a comment