Exposure Data Audit - Personally Identifiable Information - SQL Server

As a BI Architect/Developer, it may become necessary to conduct an Exposure Data Audit to uncover Personally Identifiable Information (PII)-related data within your SQL Server 2016 environment. The objective is to uncover any potential data security exposure risks in a continued effort to consider and implement enterprise grade security policies.

Microsoft SQL Server 2016 introduces new features, improved performance and a continued effort on providing options to implement enterprise grade security.

The purpose of this post is to outline a solution using SSIS, T-SQL, and Power BI to locate and analyze the PII information stored in a SQL Server 2016 environment.

PII – What is it?

The U.S Department of Commerce defines Personally Identifiable Information (PII) as any information about an individual that can be used to distinguish or trace an individual’s identity, such as name, social security number, date and place of birth, mother’s maiden name, or bio-metric records; and any other information that is linked or link-able to an individual, such as medical, educational, financial, and employment information. Annually, data leaks expose information on millions of people, potentially raising the risk of identity theft for those whose information is exposed.

Exposure Data Analysis Solution

Using combinations of Database, T-SQL, SSIS, and Power BI were used to locate and analyze the PII information stored in a SQL Server 2016 environment.

Exposure Data Analysis DB

Database Project

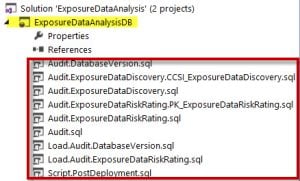

The Exposure Data Analysis Solution contains a database project. The database project was included in the solution so that the scripts used to create the database objects, such as tables, views, etc. were self-contained in the solution. Since most of us are familiar with using Visual Studio/SSDT for creating BI projects for SSIS (Integration Services), SSAS (Analysis Services), and SSRS (Reporting Services) this seemed to be a good idea.

The contents in the ExposureDataAnalsisDB project are the ‘Create Table’ statements, ‘Create Schema’ statements, ‘Create Index’ and ‘Insert Data’ statements preparing the database used for the solution. This is based upon “declarative database development” which focuses on the final state desired for an object.

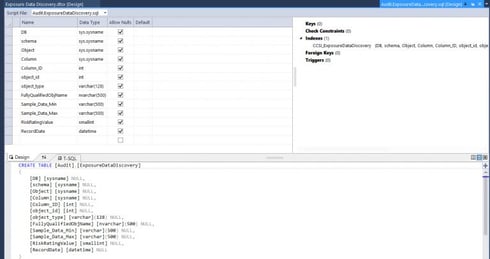

For example, here is the script used to create the [Audit].[ExposureDataDiscovery] table.

Additional scripts in the database project assist with building out the necessary database objects to support the Exposure Data Analysis Solution.

Additional scripts in the database project assist with building out the necessary database objects to support the Exposure Data Analysis Solution.

There are many benefits to including a database project in SQL Server Data Tools (SSDT):

- Easy access to DDL statements for all objects (tables, views, stored procedures, etc.)

- Perform schema comparisons. Useful when deploying to different servers or environments.

- Documenting the objects of the database.

- If using DB projects in conjunction with source control (e.g. TFS), it can permit the ability to revert back to prior versions of an object.

SSIS Project

T-SQL and SSIS were used to crawl/inspect the SYSTEM CATALOG to retrieve metadata about the objects within each database located in the SQL Server 2016 environment; specifically, tables, views, and columns in the case of the Exposure Data Analysis solution. The Exposure Data Analysis ETL project analyzes column naming standards and metadata.

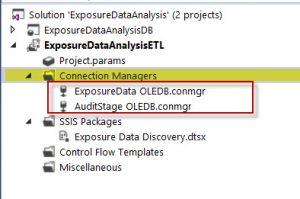

Connection Managers

Microsoft SQL Server Integration Services packages use connections to perform different tasks and to implement Integration Services features. Integration Services uses the connection manager as a logical representation of a connection. At design time, you set the properties of a connection manager to describe the physical connection that Integration Services creates when the package runs. A package can use multiple instances of a connection manager type, and you can set the properties on each instance. At run time, each instance of a connection manager type creates a connection that has different attributes.

Microsoft SQL Server Integration Services packages use connections to perform different tasks and to implement Integration Services features. Integration Services uses the connection manager as a logical representation of a connection. At design time, you set the properties of a connection manager to describe the physical connection that Integration Services creates when the package runs. A package can use multiple instances of a connection manager type, and you can set the properties on each instance. At run time, each instance of a connection manager type creates a connection that has different attributes.

A connection manager can be created at the package level or at the project level. The connection manager created at the project level is available to all the packages in the project. Whereas, a connection manager created at the package level is available to that specific package.

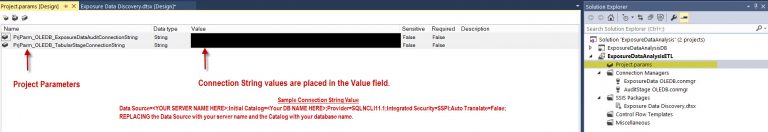

Integration Services (SSIS) parameters allow you to assign values to properties within packages at the time of package execution. You can create project parameters at the project level and package parameters at the package level. Project parameters are used to supply any external input the project receives to one or more packages in the project. Package parameters allow you to modify package execution without having to edit and redeploy the package.

Project Parameters

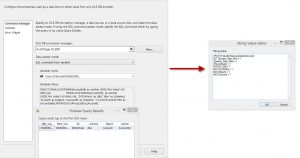

In the SSIS Package – Exposure Data Discovery, two project level connections are used. Each connection manager utilizes properties to parameterize the ConnectionString property. This allows an expression referencing the parameter to be created for the ConnectionString property. Two project level parameters were created in anticipation of a project deployment scenario in which this solution is deployed across mutliple environments. Potentially taking advantage of SSIS Environment Variables resolving the connections through the specified environment reference and applying it to the parameter.

SSIS Package – Exposure Data Discovery

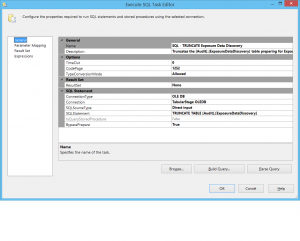

The first step in the Exposure Data Discovery SSIS package is to use a EXECUTE SQL TASK to truncate the table used to store the exposure data preparing it for data collection.

![]()

Execute SQL Task – Truncate Exposure Data Audit table.

The Execute SQL Task Editor is configured using a simple TRUNCATE TABLE statement for the table ([Audit].[ExposuredataDiscovery]) preparing it for data collection.

The connection is set to the connection manager for the database where the [Audit].[ExposuredataDiscovery] table is located.

The connection is set to the connection manager for the database where the [Audit].[ExposuredataDiscovery] table is located.

The connection manager (AuditStage OLEDB) is parameterized using a project level parameter.

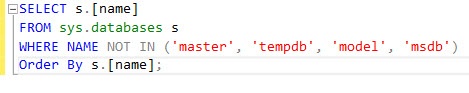

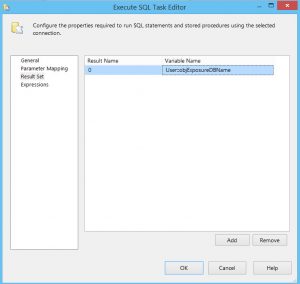

The next step in the SSIS package is to obtain the Exposure Data Discovery Databases. This step utilizes an Execute SQL Task to acquire all of the databases on a given instance of SQL Server with the exception of the master, tempdb, model, and msdb databases. It utilizes a query against the sys.databases to capture “one row per database in the instance of SQL Server”.

The result set is stored to package variable of type object (objExposureDBName).

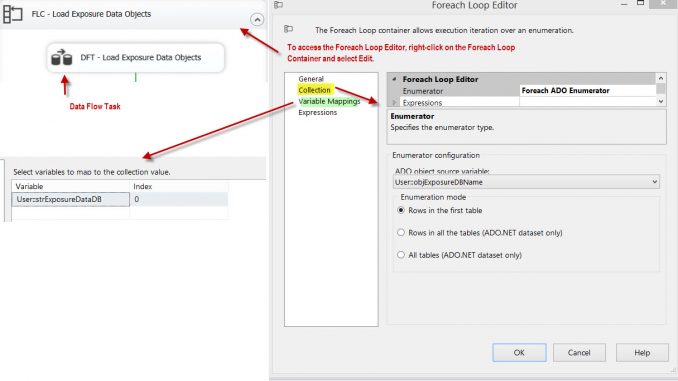

The next step is to load all of the objects of a given database that meet the criteria for identifying columns as potential PII-related items. Therefore, we introduce the first Data Flow Task (DFT – Load Exposure Data Objects) in the SSIS package. Additionally, since we have a need to loop through each table, view, and column returned, a For Loop Container (FLC – Load Exposure Data Objects) is also introduced at this step. The Foreach ADO enumerator enumerates a recordset in memory that is stored in a package variable (objExposureDBName) of the object data type.

The next step is to load all of the objects of a given database that meet the criteria for identifying columns as potential PII-related items. Therefore, we introduce the first Data Flow Task (DFT – Load Exposure Data Objects) in the SSIS package. Additionally, since we have a need to loop through each table, view, and column returned, a For Loop Container (FLC – Load Exposure Data Objects) is also introduced at this step. The Foreach ADO enumerator enumerates a recordset in memory that is stored in a package variable (objExposureDBName) of the object data type.

The DFT – Load Exposure Data Objects uses a source query using a SQL command from variable data  access mode to obtain each table that has a column that matches wild card critera (e.g. c.name like ‘%acc%’) to capture records that will be loaded to the destination table [Audit].[ExposuredataDiscovery].

access mode to obtain each table that has a column that matches wild card critera (e.g. c.name like ‘%acc%’) to capture records that will be loaded to the destination table [Audit].[ExposuredataDiscovery].

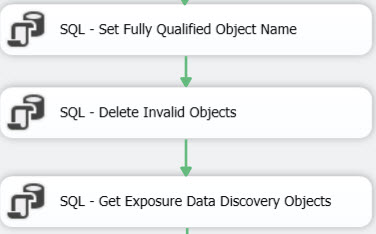

There are three subsequent Execute SQL Tasks that perform:

- SQL – Set Fully Qualified Object Name – Sets the FullyQualifiedObjectName value to [DB].[Schema].[Table of View Name].[Column] (e.g. [PWInsurance].[People].[Company].[Address1])

- SQL – Delete Invalid Objects – Responsible for deleting invalid objects. For example, if schema

binding errors are encountered due to missing tables or columns, but were never cleaned up, it will prevent the package from completing successfully. However, you may want to still review these items. These items are kept as a separate list, but removed by the delete statement to permit successful execution of the SSIS package. Additionally, during the development cycle the error, Msg 41317, Level 16, State 6, Line 137 – A user transaction that accesses memory optimized tables or natively compiled modules cannot access more than one user database or databases model and msdb, and it cannot write to master. Where this error was encountered, those objects were removed and also added to a separate list and ommitted from the [Audit].[ExposuredataDiscovery] table. Research continues to determine the source of this error and a method to detect it programatically.

binding errors are encountered due to missing tables or columns, but were never cleaned up, it will prevent the package from completing successfully. However, you may want to still review these items. These items are kept as a separate list, but removed by the delete statement to permit successful execution of the SSIS package. Additionally, during the development cycle the error, Msg 41317, Level 16, State 6, Line 137 – A user transaction that accesses memory optimized tables or natively compiled modules cannot access more than one user database or databases model and msdb, and it cannot write to master. Where this error was encountered, those objects were removed and also added to a separate list and ommitted from the [Audit].[ExposuredataDiscovery] table. Research continues to determine the source of this error and a method to detect it programatically. - SQL – Get Exposure Data Discovery Objects – Acquires data from [Audit].[ExposuredataDiscovery] table preparing to provide MIN and MAX values for the PII-related columns that have been detected. The result set is assigned to an object variable that is referenced in the next steps of the process.

The next step in the process, once again, utilizes a For Loop Container (FLC – Exposure Data Sample Values) and Data Flow Task (DFT – Update Exposure Data Sample Values) combination to capture the MIN and MAX data values for each previously identified PII column. The source query returns the data and a OLE DB Command is used to update the [Audit].[ExposuredataDiscovery]

The next step in the process, once again, utilizes a For Loop Container (FLC – Exposure Data Sample Values) and Data Flow Task (DFT – Update Exposure Data Sample Values) combination to capture the MIN and MAX data values for each previously identified PII column. The source query returns the data and a OLE DB Command is used to update the [Audit].[ExposuredataDiscovery]

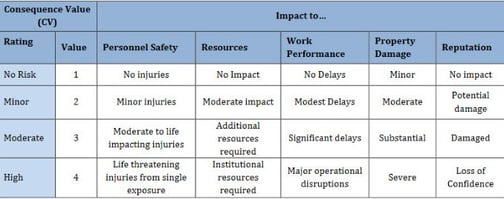

Last in the process updates to the risk rating values occur. Risk is the probability that a hazard will result in an adverse consequence. Assessing risk of potential hazards helps to determine the proper mitigation strategy and priorities. Ultimately this logic can be updated as risks are identified, evaluated, and understood to properly categorize them from low – high risk potential.

an adverse consequence. Assessing risk of potential hazards helps to determine the proper mitigation strategy and priorities. Ultimately this logic can be updated as risks are identified, evaluated, and understood to properly categorize them from low – high risk potential.

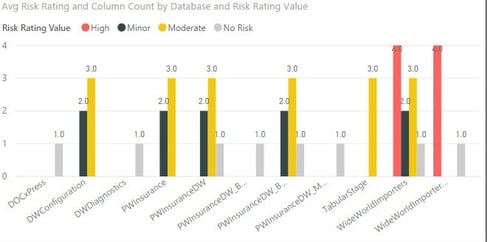

Power BI

The primary objective of the Exposure Data Audit review was to differentiate between the high-risk and low-risk data elements in relation to Personally Identifiable Information (PII).The Exposure Data Audit Power BI solution is used to analyze, visualize, and inspect the data.

There are 4 pages (reports) included in the Power BI Desktop solution:

- Exposure Data Audit Overview – Provides high-level information for the overall Exposure Data Audit solution.

- Exposure Data Audit Summary – Provides visualizations (charts, graphs, etc.) related to PII data risk assessment.

- Exposure Data Audit Detail – Provides detail-level information related to the PII data risk assessment.

- Invalid Objects – This provides information related to tables, views that resulted in binding errors and/or performance-related issues during the Exposure Data load process. These items will require manual review to determine next steps. (Data Source: Excel file stored in Project SharePoint location)

Exposure Data Audit Flow

The Exposure Data Audit application (in an automated way) assists with identifying the risks based on column naming standards and the potential values they may hold. The Assessment criteria was initially described as columns (anything that might have the potential to be PII). The Exposure Data Audit solution, based on the assessment scale used in a basic way, provides what Risk Interactions may occur.

The Exposure Data Audit application (in an automated way) assists with identifying the risks based on column naming standards and the potential values they may hold. The Assessment criteria was initially described as columns (anything that might have the potential to be PII). The Exposure Data Audit solution, based on the assessment scale used in a basic way, provides what Risk Interactions may occur.

Based on the latest Exposure Data Audit run, you can review the data using the visualizations and the sample data values capture and potentially prioritize the risks – or – possibly adjust the logic to refine the categorization of the risks, therefore, interactively permitting how to respond to the risks.

Next Steps

With the release of SQL Server 2016 introduces new features, improved performance and a continued effort on enterprise grade security.

This Reference Architecture is designed to provide data protection using Transparent Data Encryption, Always Encrypted, Row Level Security, and Dynamic Data Masking.

With the introduction of these features in 2016 we can:

- Protect our data at rest and in motion

- Provide data to only those who should see it

- Protect our data at the row level based on user access

- Dynamically Mask Data

Webinar Recording and SSIS Solution

Feel free to access the full recording of my webinar on this topic and download the solution.

Sign-up now and get instant access

ABOUT THE AUTHOR

I am an IT professional with over 20+ years of progressive technical experience with managing, consulting, software development, data integration architecture, data warehousing, and business intelligence development projects. The focus of my work is to work closely with stakeholders to develop architecture frameworks that align with organizational strategy, processes, and IT assets. Specializing in Business Intelligence (BI), Data Warehousing, database architecture, design and implementation, performance tuning and troubleshooting.

Free Community Plan

On-demand learning

Most Recent

private training

.jpg?width=406&name=Eans%20YouTube%20Thumbnails%20(2).jpg)

-1.png)

Leave a comment